Staying on top of competitors' prices allows businesses to adjust quickly and protect revenue. Some major eCommerce companies reportedly make over 2.5 million price changes per day to stay ahead. At that pace, real-time insight into competitors' pricing is now essential for maintaining a competitive edge.

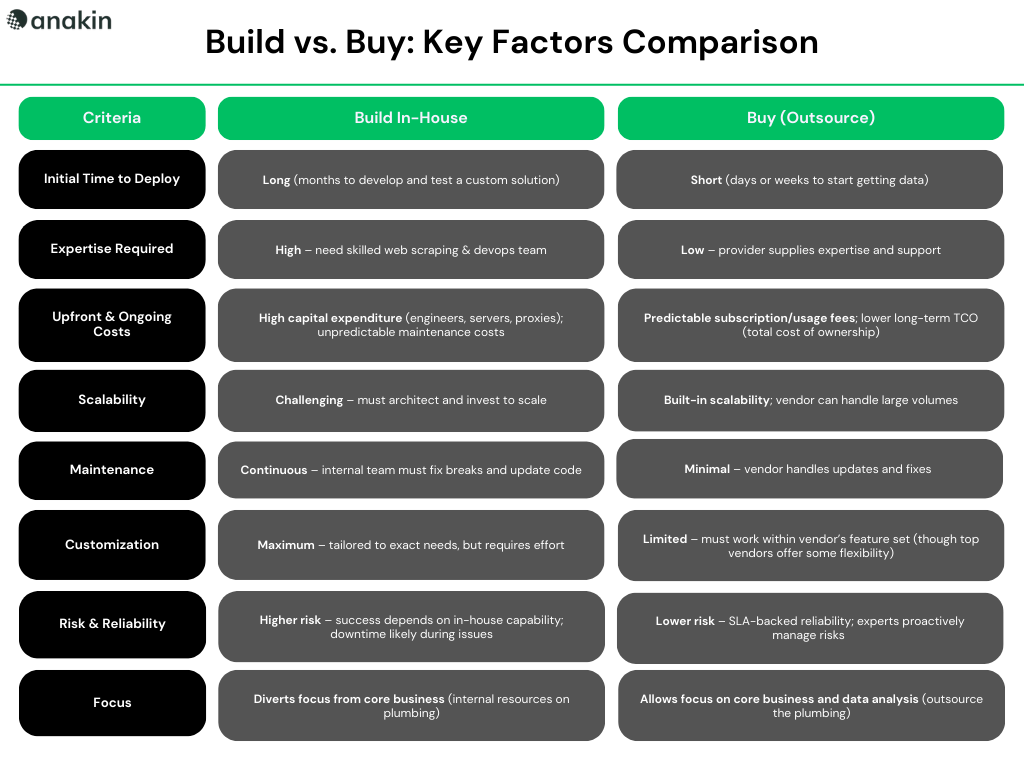

In today's fast-moving markets, competitive pricing intelligence is crucial for adjusting strategy and protecting market share. Companies need timely, accurate pricing data to respond effectively to competitors' actions and market trends. However, gathering such data at scale poses significant challenges and requires careful consideration of available resources and capabilities. This leads to a dilemma: build an in-house web scraping system to gather pricing data or buy a ready-made solution.

Let's compare building an in-house web scraping solution with buying a Competition Intelligence Solution, to determine which option best fits your business and how to balance cost, time, and quality for effective data extraction.

Scoping involves clearly defining project priorities and constraints, simplifying decision-making while establishing a framework for successful implementation.

For web data extraction projects, three critical dimensions must be carefully balanced:

Quality: The accuracy, completeness, and reliability of the extracted data. Quality depends heavily on the choice of scraping tools, the expertise of developers, and the effectiveness of data processing methods, and maintaining it requires continuous validation, monitoring, and timely adjustments to keep the data consistent and usable.

Time: How long your internal team will need to develop, launch, and operationalize version 1.0 before it delivers usable data. Assessing this timeframe accurately is crucial when deciding whether to buy or build. Also consider the required frequency and intensity of data extraction, as these significantly affect the complexity and duration of development.

Cost: Includes scraping tools, developer expertise, and infrastructure such as hosting and storage. Ongoing maintenance, system updates, and unexpected technical issues can also add significantly to overall expenses.

Defining a clear scope across speed, quality, and cost guides investment decisions and the choice of extraction method, making it far easier to achieve the desired results.

Developing an in-house solution provides extensive customization, enabling businesses to design tools tailored precisely to their unique requirements. However, this approach typically demands considerable resources. Here are a few challenges that need to be considered:

Technical Complexity: Building a scalable, reliable scraper infrastructure is technically arduous. It's not just about parsing HTML; you must handle anti-scraping defenses like IP bans, CAPTCHAs, dynamic content loading, and frequent site structure changes. In-house teams must provision servers and networks capable of crawling at scale, often needing a pool of rotating proxy IPs to avoid getting blocked.
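To give a sense of what just one piece of this infrastructure involves, here is a minimal Python sketch of proxy rotation. It assumes a hypothetical `send(url, proxy)` callable standing in for a real HTTP client and returning a status code; the class and function names and the proxy hostnames are illustrative only. It cycles round-robin through a pool of proxies, retires any proxy that receives a 403 or 429 response (treated here, as an assumption, as a sign of being blocked), and retries through the next one. A production system would also need CAPTCHA handling, JavaScript rendering, back-off, and proxy health checks.

```python
import itertools
from typing import Callable, Iterable


class ProxyRotator:
    """Round-robin over a pool of proxy URLs, skipping proxies marked as blocked."""

    def __init__(self, proxies: Iterable[str]) -> None:
        self._pool = list(proxies)
        if not self._pool:
            raise ValueError("proxy pool must not be empty")
        self._blocked: set[str] = set()
        self._cycle = itertools.cycle(self._pool)

    def mark_blocked(self, proxy: str) -> None:
        self._blocked.add(proxy)

    def next_proxy(self) -> str:
        # Walk at most one full cycle looking for a proxy that is not blocked.
        for _ in range(len(self._pool)):
            proxy = next(self._cycle)
            if proxy not in self._blocked:
                return proxy
        raise RuntimeError("all proxies are blocked")


def fetch_with_rotation(
    url: str,
    rotator: ProxyRotator,
    send: Callable[[str, str], int],  # hypothetical: (url, proxy) -> HTTP status code
    max_attempts: int = 3,
) -> str:
    """Retry a request through successive proxies; return the proxy that succeeded."""
    last_status = None
    for _ in range(max_attempts):
        proxy = rotator.next_proxy()
        status = send(url, proxy)
        if status == 200:
            return proxy
        if status in (403, 429):
            # Assumption: these statuses mean the target site has banned this proxy.
            rotator.mark_blocked(proxy)
        last_status = status
    raise RuntimeError(
        f"giving up on {url} after {max_attempts} attempts (last status {last_status})"
    )


# Usage with a simulated transport in which proxy-a has been banned by the target site.
pool = ["http://proxy-a:8080", "http://proxy-b:8080", "http://proxy-c:8080"]
rotator = ProxyRotator(pool)


def fake_send(url: str, proxy: str) -> int:
    return 403 if proxy.startswith("http://proxy-a") else 200


print(fetch_with_rotation("https://example.com/product/123", rotator, fake_send))
# prints "http://proxy-b:8080"
```

Even this toy version has to decide what counts as a block and when to give up, and it never brings a banned proxy back into the pool, which a real system would need to do. These are the kinds of decisions an in-house team must make and keep revisiting as target sites change.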

High Development Costs: Creating a custom scraper requires skilled staff and constant infrastructure upkeep. The effort and costs add up quickly, often exceeding initial budget projections as complexity increases.

Long Time to Market: An in-house tool can take months or even years to implement fully. In a fast-paced market, that delay can mean missed opportunities and a competitive disadvantage.

Outcome Uncertainty: There is no guarantee that the in-house tool will meet the business's standards and requirements, which puts both the investment and the implementation at risk.

Maintenance Burden: Maintaining the tool consumes a significant amount of management and business time, which could otherwise be directed toward core business activities and strategic initiatives.

Purchasing a commercial web scraping service bypasses these challenges and offers key advantages:

Speed & Cost-Effectiveness: Third-party solutions are ready to deploy, so you start receiving data quickly. With pay-as-you-go pricing, you pay only for what you need, which is often much less than the cost of building in-house.

Robust & Scalable Service: Vendors handle the heavy lifting (scaling infrastructure, overcoming anti-scraping measures, and ensuring compliance), delivering reliable data with minimal effort on your side. The result is typically higher success rates and better data quality. For instance, one enterprise that outsourced to a leading provider was able to run 100+ million scrape requests per month at a 99.9% success rate.

Faster Time to Market: Perhaps the biggest advantage of purchasing a ready-made solution is rapid deployment. Instead of spending months coding and testing an internal tool, you can start collecting data soon after signing on. Depending on the partner you choose, you can go live in as little as a couple of weeks.

No Maintenance Burden: Purchasing a solution effectively outsources continuous engineering updates and maintenance responsibilities to the vendor. Their expert team ensures reliable and consistent data delivery by adapting scrapers to website changes, promptly addressing scraper issues, and proactively updating systems to counter emerging anti-bot measures.

Consider these factors when deciding:

While an in-house build offers control, buying is the smarter choice. Faster deployment and lower maintenance needs outweigh the benefits of a DIY approach. Many firms find that top data providers deliver quality results at a lower cost than internal teams. To stay ahead on pricing, buying a solution provides a clear strategic advantage. A dedicated partner specializing in web extraction will enhance value across all key decision factors outlined above while eliminating the burden on management bandwidth.

Take charge of your data-driven future today. At Anakin, we're here to provide the support and data you need to thrive in a rapidly evolving marketplace. If you have any questions or want to discuss your project in more detail, don't hesitate to contact us.

Written by Anakin Team