Data is central to every critical business decision, informing product development, marketing strategies, and long-term growth plans. Yet the worth of that data hinges on one factor: quality. Incomplete or inaccurate data can lead to wasted resources and missed market opportunities. For those engaging in web scraping and automated data collection, this risk is especially high. That is why maintaining data quality must be a top priority from day one.

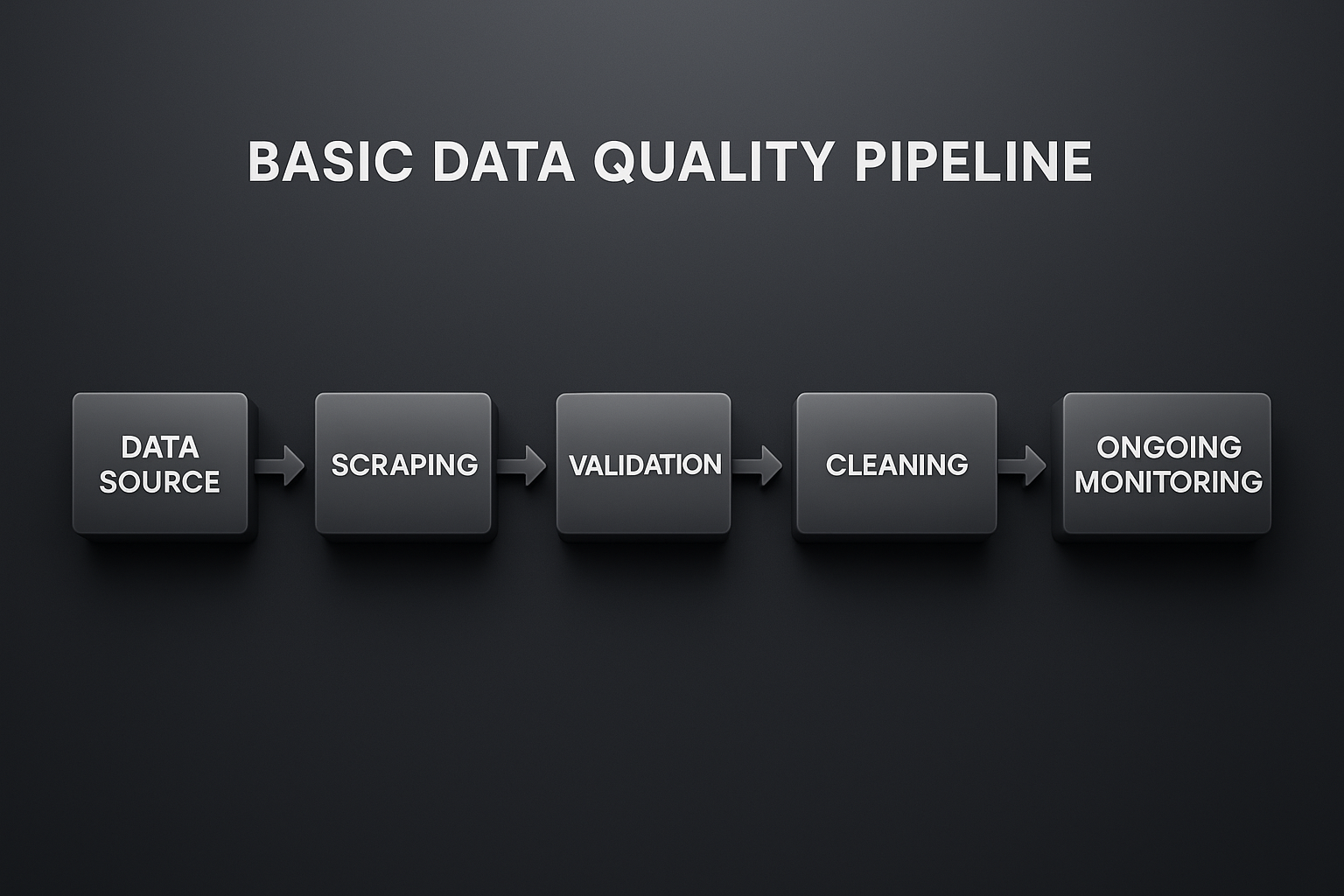

This guide explores best practices for ensuring high-quality data in web scraping efforts. It also delves into more advanced strategies, such as machine learning–driven anomaly detection and continuous monitoring systems, to elevate your data quality workflow.

High-quality data yields better insights. Clean and accurate datasets can uncover meaningful trends, reveal shifting customer preferences, and highlight unexpected changes in your market. Conversely, errors, duplications, or missing records can derail even the most carefully planned initiatives.

The temptation to "collect all data possible" remains a common trap. Large volumes of unstructured or poorly validated data can obscure the most critical insights, making it difficult to discern relevant patterns. The real challenge lies in balancing volume and quality so you have enough data to see trends, but not so much that you drown in noise.

Before you scrape, define specific goals:

Establishing these details keeps your focus on collecting the right data. It also sets the stage for your validation and cleaning efforts. For instance, if your goal is to track competitor pricing on an hourly basis or every 24 hours, your data must include exact timestamps, product identifiers, and current price fields—no exceptions.

Well-structured data is easier to clean, merge, and analyze. Use uniform file formats, databases, or cloud storage solutions that maintain consistent data types. Standardize timestamps, currency fields, and naming conventions. For example:

Early consistency helps identify outliers and anomalies. If you always expect price_usd to be a number, spotting erroneous text values (e.g., "contact for price") becomes straightforward, an early clue that your scraping logic may need adjustments.

Confirm that URLs are valid and accessible before initiating data collection processes.

Leverage both simple rules and advanced anomaly detection algorithms. For instance, if prices normally range between $10-$50, a jump to $500 could indicate an error or market signal.

Implement automated alerts for when key CSS selectors or API endpoints fail, ensuring your data pipeline remains robust as website structures change.

Below is an updated table showing both typical issues and more advanced fixes:

IssueExampleImpactPotential SolutionDuplicate RecordsSame SKU captured multiple times from various pagesInflated counts, skewed analyticsDe-duplicate using a unique identifier. Implement hashing to detect repeats.Formatting ErrorsDates mixed between YYYY-MM-DD and DD-MM-YYYYDifficulty in sorting, merging, or visualizing dataUse date-parsing libraries to standardize formats at ingestion.Missing ValuesBlank price or description fieldsIncomplete insights, biased analysisCreate a re-scrape queue for missing fields. Use placeholder checks.Incorrect FieldsScraping the wrong HTML elementMisleading analysis, wasted effortImplement continuous QA checks, use multiple selectors, and cross-verify sourcesSuspicious OutliersDaily prices are suddenly jumping 10x the normIt could be a real anomaly or a scraping failureTrain a simple ML model or use statistical thresholds to flag unusual data.

Traditional validation rules (e.g., "reject if blank") can catch obvious issues. But advanced algorithms can spot patterns too subtle for rigid rules. For instance, time series models can learn typical pricing fluctuations and highlight atypical spikes or drops.

This approach helps you identify not only errors but also genuine market shifts faster than competitors.

Automation reduces manual intervention and frees up resources. Consider:

Monitoring should be ongoing, especially for sites that change often. Add version control to track your data schema. If a field changes meaning or a site updates its structure, document the revision to maintain data lineage.

If you outsource your scraping or integrate with competitor intelligence platforms, choose providers who emphasize end-to-end quality. At Anakin, for example, robust validation and anomaly detection are woven into every step, from initial extraction to final reporting. This ensures that clients receive data they can trust for informed decision-making.

Achieving superior data quality in web scraping requires more than basic checklists. By combining clear objectives, rigorous validation, consistent data structuring, and advanced anomaly detection, you build a powerful system that continually refines its accuracy. This holistic approach not only saves time and money but also uncovers valuable insights faster, giving you a real competitive edge.

In a market where information is the most potent resource, investing in refined data quality processes pays off. Balancing depth, rigor, and adaptability ensures that your data remains a reliable guide for strategic decisions today and into the future.

Written by Anakin Analytics Team